Kubernetes Blog

The Kubernetes blog is used by the project to communicate new features, community reports, and any news that might be relevant to the Kubernetes community.

-

Before You Migrate: Five Surprising Ingress-NGINX Behaviors You Need to Know

on February 27, 2026 at 3:30 pm

As announced in November 2025, Kubernetes will retire Ingress-NGINX in March 2026. Despite its widespread usage, Ingress-NGINX is full of surprising defaults and side effects that are probably present in your cluster today. This blog post highlights these behaviors so that you can migrate away safely and make a conscious decision about which behaviors to keep. It also compares Ingress-NGINX with Gateway API and shows you how to preserve Ingress-NGINX behavior in Gateway API. The recurring risk pattern in every section is the same: a seemingly correct translation can still cause outages if it does not account for Ingress-NGINX’s quirks.

I’m going to assume that you, the reader, have some familiarity with Ingress-NGINX and the Ingress API. Most examples use httpbin as the backend.

Also, note that Ingress-NGINX and NGINX Ingress are two separate Ingress controllers. Ingress-NGINX is an Ingress controller maintained and governed by the Kubernetes community that is retiring in March 2026. NGINX Ingress is an Ingress controller by F5. Both use NGINX as the dataplane, but are otherwise unrelated. From now on, this blog post only discusses Ingress-NGINX.

1. Regex matches are prefix-based and case-insensitive

Suppose that you want to route all requests with a path consisting of only three uppercase letters to the httpbin service. You might create the following Ingress with the nginx.ingress.kubernetes.io/use-regex: "true" annotation and the regex pattern /[A-Z]{3}.
```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: regex-match-ingress
  annotations:
    nginx.ingress.kubernetes.io/use-regex: "true"
spec:
  ingressClassName: nginx
  rules:
  - host: regex-match.example.com
    http:
      paths:
      - path: "/[A-Z]{3}"
        pathType: ImplementationSpecific
        backend:
          service:
            name: httpbin
            port:
              number: 8000
```

However, because regex matches are prefix-based and case-insensitive, Ingress-NGINX routes any request with a path that starts with any three letters to httpbin:

```shell
curl -sS -H "Host: regex-match.example.com" http://<your-ingress-ip>/uuid
```

The output is similar to:

```json
{
  "uuid": "e55ef929-25a0-49e9-9175-1b6e87f40af7"
}
```

Note: The /uuid endpoint of httpbin returns a random UUID. A UUID in the response body means that the request was successfully routed to httpbin.

With Gateway API, you can use an HTTP path match with a type of RegularExpression for regular expression path matching. RegularExpression matches are implementation-specific, so check with your Gateway API implementation to verify the semantics of RegularExpression matching. Popular Envoy-based Gateway API implementations such as Istio¹, Envoy Gateway, and Kgateway do a full, case-sensitive match.

Thus, if you are unaware that Ingress-NGINX patterns are prefix-based and case-insensitive, and, unbeknownst to you, clients or applications send traffic to /uuid (or /uuid/some/other/path), you might create the following HTTP route.

```yaml
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: regex-match-route
spec:
  hostnames:
  - regex-match.example.com
  parentRefs:
  - name: <your-gateway> # Change this depending on your use case
  rules:
  - matches:
    - path:
        type: RegularExpression
        value: "/[A-Z]{3}"
    backendRefs:
    - name: httpbin
      port: 8000
```

However, if your Gateway API implementation does full, case-sensitive matches, the above HTTP route would not match a request with a path of /uuid.
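To see the difference concretely, here is a small Go sketch using the standard regexp package. It assumes, as a simplification, that Ingress-NGINX evaluates a use-regex path as a case-insensitive regex anchored only at the start of the path, and that an Envoy-based Gateway API implementation requires the whole path to match case-sensitively. The function names are illustrative, and Go's RE2 syntax only approximates NGINX's PCRE and Envoy's regex configuration.

```go
package main

import (
	"fmt"
	"regexp"
)

// ingressNginxStyle approximates an Ingress-NGINX use-regex path: the pattern
// is case-insensitive and anchored only at the start, so it acts as a prefix match.
func ingressNginxStyle(pattern, path string) bool {
	return regexp.MustCompile("(?i)^" + pattern).MatchString(path)
}

// fullMatch approximates an Envoy-based RegularExpression match: the whole
// path must match, case-sensitively unless the pattern itself says otherwise.
func fullMatch(pattern, path string) bool {
	return regexp.MustCompile("^(?:" + pattern + ")$").MatchString(path)
}

func main() {
	for _, p := range []string{"/uuid", "/uuid/some/other/path", "/ABC"} {
		fmt.Printf("%-22s ingress-nginx=%-5v naive-route=%-5v preserved-route=%v\n",
			p,
			ingressNginxStyle("/[A-Z]{3}", p), // Ingress rule from the example
			fullMatch("/[A-Z]{3}", p),         // naive HTTPRoute translation
			fullMatch("/[a-zA-Z]{3}.*", p))    // behavior-preserving HTTPRoute
	}
}
```

Only /ABC matches the naive translation, while the Ingress rule and the preserved pattern both accept all three paths.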
The above HTTP route would thus cause an outage, because requests that Ingress-NGINX routed to httpbin would fail with a 404 Not Found at the gateway. To preserve the case-insensitive prefix matching, you can use the following HTTP route.

```yaml
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: regex-match-route
spec:
  hostnames:
  - regex-match.example.com
  parentRefs:
  - name: <your-gateway> # Change this depending on your use case
  rules:
  - matches:
    - path:
        type: RegularExpression
        value: "/[a-zA-Z]{3}.*"
    backendRefs:
    - name: httpbin
      port: 8000
```

Alternatively, the aforementioned proxies support the (?i) flag to indicate case-insensitive matches. Using the flag, the pattern could be (?i)/[a-z]{3}.*.

2. The nginx.ingress.kubernetes.io/use-regex annotation applies to all paths of a host across all (Ingress-NGINX) Ingresses

Now, suppose that you have an Ingress with the nginx.ingress.kubernetes.io/use-regex: "true" annotation, but you want to route requests with a path of exactly /headers to httpbin. Unfortunately, you made a typo and set the path to /Header instead of /headers.

```yaml
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: regex-match-ingress
  annotations:
    nginx.ingress.kubernetes.io/use-regex: "true"
spec:
  ingressClassName: nginx
  rules:
  - host: regex-match.example.com
    http:
      paths:
      - path: "<some regex pattern>"
        pathType: ImplementationSpecific
        backend:
          service:
            name: <your-backend>
            port:
              number: 8000
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: regex-match-ingress-other
spec:
  ingressClassName: nginx
  rules:
  - host: regex-match.example.com
    http:
      paths:
      - path: "/Header" # typo here, should be /headers
        pathType: Exact
        backend:
          service:
            name: httpbin
            port:
              number: 8000
```

Most would expect a request to /headers to respond with a 404 Not Found, since /headers does not match the Exact path of /Header.
However, because the regex-match-ingress Ingress has the nginx.ingress.kubernetes.io/use-regex: "true" annotation and the regex-match.example.com host, all paths with the regex-match.example.com host are treated as regular expressions across all (Ingress-NGINX) Ingresses. Since regex patterns are case-insensitive prefix matches, /headers matches the /Header pattern, and Ingress-NGINX routes such requests to httpbin. Running the command

```shell
curl -sS -H "Host: regex-match.example.com" http://<your-ingress-ip>/headers
```

the output looks like:

```json
{
  "headers": {
    …
  }
}
```

Note: The /headers endpoint of httpbin returns the request headers. The fact that the response contains the request headers in the body means that the request was successfully routed to httpbin.

Gateway API does not silently convert or interpret Exact and Prefix matches as regex patterns. So if you converted the above Ingresses into the following HTTP route and preserved the typo and match types, requests to /headers would respond with a 404 Not Found instead of a 200 OK.

```yaml
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: regex-match-route
spec:
  hostnames:
  - regex-match.example.com
  rules:
  …
  - matches:
    - path:
        type: Exact
        value: "/Header"
    backendRefs:
    - name: httpbin
      port: 8000
```

To keep the case-insensitive prefix matching, you can change

```yaml
- matches:
  - path:
      type: Exact
      value: "/Header"
```

to

```yaml
- matches:
  - path:
      type: RegularExpression
      value: "(?i)/Header.*"
```

Or, even better, you could fix the typo and change the match to

```yaml
- matches:
  - path:
      type: Exact
      value: "/headers"
```

3. Rewrite target implies regex

In this case, suppose you want to rewrite the path of requests with a path of /ip to /uuid before routing them to httpbin, and, as in Section 2, you want to route requests with the path of exactly /headers to httpbin. However, you accidentally make a typo and set the path to /IP instead of /ip and /Header instead of /headers.
```yaml
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: rewrite-target-ingress
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: "/uuid"
spec:
  ingressClassName: nginx
  rules:
  - host: rewrite-target.example.com
    http:
      paths:
      - path: "/IP"
        pathType: Exact
        backend:
          service:
            name: httpbin
            port:
              number: 8000
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: rewrite-target-ingress-other
spec:
  ingressClassName: nginx
  rules:
  - host: rewrite-target.example.com
    http:
      paths:
      - path: "/Header"
        pathType: Exact
        backend:
          service:
            name: httpbin
            port:
              number: 8000
```

The nginx.ingress.kubernetes.io/rewrite-target: "/uuid" annotation causes requests that match paths in the rewrite-target-ingress Ingress to have their paths rewritten to /uuid before being routed to the backend.

Even though no Ingress has the nginx.ingress.kubernetes.io/use-regex: "true" annotation, the presence of the nginx.ingress.kubernetes.io/rewrite-target annotation in the rewrite-target-ingress Ingress causes all paths with the rewrite-target.example.com host to be treated as regex patterns. In other words, the nginx.ingress.kubernetes.io/rewrite-target annotation silently adds the nginx.ingress.kubernetes.io/use-regex: "true" annotation, along with all the side effects discussed above.

For example, a request to /ip has its path rewritten to /uuid because /ip matches the case-insensitive prefix pattern /IP in the rewrite-target-ingress Ingress. After running the command

```shell
curl -sS -H "Host: rewrite-target.example.com" http://<your-ingress-ip>/ip
```

the output is similar to:

```json
{
  "uuid": "12a0def9-1adg-2943-adcd-1234aadfgc67"
}
```

As in the nginx.ingress.kubernetes.io/use-regex example, Ingress-NGINX treats paths of other Ingresses with the rewrite-target.example.com host as case-insensitive prefix patterns.
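The host-wide effect of these two annotations can be modelled in a few lines of Go. This is a hypothetical sketch, not Ingress-NGINX code: the hostPath type and both functions are invented for illustration, and the sketch assumes that once a host is in regex mode, every path on it becomes a case-insensitive, start-anchored regex, as described in Sections 2 and 3.

```go
package main

import (
	"fmt"
	"regexp"
	"strings"
)

// hostPath is a hypothetical, simplified view of one path entry for a host,
// together with the annotations of the Ingress that defines it.
type hostPath struct {
	Path               string
	PathType           string // "Exact" or "Prefix" (ImplementationSpecific omitted)
	IngressAnnotations map[string]string
}

// hostUsesRegex reports whether any Ingress for the host switches the whole
// host into regex mode, via use-regex: "true" or any rewrite-target.
func hostUsesRegex(paths []hostPath) bool {
	for _, p := range paths {
		if p.IngressAnnotations["nginx.ingress.kubernetes.io/use-regex"] == "true" {
			return true
		}
		if _, ok := p.IngressAnnotations["nginx.ingress.kubernetes.io/rewrite-target"]; ok {
			return true
		}
	}
	return false
}

// matches reports whether reqPath is routed by entry p, given the host's mode.
func matches(p hostPath, regexMode bool, reqPath string) bool {
	if regexMode {
		// pathType is ignored: the path becomes a case-insensitive prefix regex.
		return regexp.MustCompile("(?i)^" + p.Path).MatchString(reqPath)
	}
	switch p.PathType {
	case "Exact":
		return reqPath == p.Path
	case "Prefix":
		// Element-wise prefix: "/foo" matches "/foo" and "/foo/bar", not "/foobar".
		base := strings.TrimSuffix(p.Path, "/")
		return reqPath == base || strings.HasPrefix(reqPath, base+"/")
	}
	return false
}

func main() {
	// The two Ingresses from Section 3, flattened into one host's path list.
	paths := []hostPath{
		{Path: "/IP", PathType: "Exact", IngressAnnotations: map[string]string{
			"nginx.ingress.kubernetes.io/rewrite-target": "/uuid"}},
		{Path: "/Header", PathType: "Exact"},
	}
	regexMode := hostUsesRegex(paths)
	for _, req := range []string{"/ip", "/headers"} {
		for _, p := range paths {
			if matches(p, regexMode, req) {
				fmt.Printf("%s -> rule %s\n", req, p.Path)
			}
		}
	}
}
```

Setting regexMode to false instead shows the behavior a Gateway API Exact match gives you: neither /ip nor /headers matches.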
Running the command

```shell
curl -sS -H "Host: rewrite-target.example.com" http://<your-ingress-ip>/headers
```

gives an output that looks like

```json
{
  "headers": {
    …
  }
}
```

You can configure path rewrites in Gateway API with the HTTP URL rewrite filter, which does not silently convert your Exact and Prefix matches into regex patterns. However, if you are unaware of the side effects of the nginx.ingress.kubernetes.io/rewrite-target annotation and do not realize that /Header and /IP are both typos, you might create the following HTTP route.

```yaml
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: rewrite-target-route
spec:
  hostnames:
  - rewrite-target.example.com
  parentRefs:
  - name: <your-gateway>
  rules:
  - matches:
    - path:
        type: Exact
        value: "/IP"
    filters:
    - type: URLRewrite
      urlRewrite:
        path:
          type: ReplaceFullPath
          replaceFullPath: /uuid
    backendRefs:
    - name: httpbin
      port: 8000
  - matches:
    - path: # This is an exact match, irrespective of other rules
        type: Exact
        value: "/Header"
    backendRefs:
    - name: httpbin
      port: 8000
```

As in Section 2, because /IP is now an Exact match type in your HTTP route, requests to /ip will respond with a 404 Not Found instead of a 200 OK. Similarly, requests to /headers will also respond with a 404 Not Found instead of a 200 OK. Thus, this HTTP route will break applications and clients that rely on the /ip and /headers routes.

To fix this, you can change the matches in the HTTP route to regex matches and change the path patterns to case-insensitive prefix matches, as follows.

```yaml
- matches:
  - path:
      type: RegularExpression
      value: "(?i)/IP.*"
…
- matches:
  - path:
      type: RegularExpression
      value: "(?i)/Header.*"
```

Or, you can keep the Exact match type and fix the typos.

4. Requests missing a trailing slash are redirected to the same path with a trailing slash

Consider the following Ingress:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: trailing-slash-ingress
spec:
  ingressClassName: nginx
  rules:
  - host: trailing-slash.example.com
    http:
      paths:
      - path: "/my-path/"
        pathType: Exact
        backend:
          service:
            name: <your-backend>
            port:
              number: 8000
```

You might expect Ingress-NGINX to respond to /my-path with a 404 Not Found, since /my-path does not exactly match the Exact path of /my-path/. However, Ingress-NGINX redirects the request to /my-path/ with a 301 Moved Permanently, because the only difference between /my-path and /my-path/ is a trailing slash.

```shell
curl -isS -H "Host: trailing-slash.example.com" http://<your-ingress-ip>/my-path
```

The output looks like:

```text
HTTP/1.1 301 Moved Permanently
…
Location: http://trailing-slash.example.com/my-path/
…
```

The same applies if you change the pathType to Prefix. However, the redirect does not happen if the path is a regex pattern.

Conformant Gateway API implementations do not silently configure any kind of redirect. If clients or downstream services depend on this redirect, a migration to Gateway API that does not explicitly configure request redirects will cause an outage, because requests to /my-path will now respond with a 404 Not Found instead of a 301 Moved Permanently. You can explicitly configure redirects using the HTTP request redirect filter as follows:

```yaml
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: trailing-slash-route
spec:
  hostnames:
  - trailing-slash.example.com
  parentRefs:
  - name: <your-gateway>
  rules:
  - matches:
    - path:
        type: Exact
        value: "/my-path"
    filters:
    - type: RequestRedirect # the filter type must be set explicitly
      requestRedirect:
        statusCode: 301
        path:
          type: ReplaceFullPath
          replaceFullPath: /my-path/
  - matches:
    - path:
        type: Exact # or Prefix
        value: "/my-path/"
    backendRefs:
    - name: <your-backend>
      port: 8000
```

5. Ingress-NGINX normalizes URLs

URL normalization is the process of converting a URL into a canonical form before matching it against Ingress rules and routing it. RFC 3986 Section 6.2 defines the standard normalization steps, such as removing dot segments; proxies often add further steps, such as merging consecutive slashes. Some examples are:

- removing path segments that are just a .: /my/./path -> /my/path
- removing a .. path segment together with the segment before it: /my/../path -> /path
- deduplicating consecutive slashes in a path: /my//path -> /my/path

Ingress-NGINX normalizes URLs before matching them against Ingress rules. For example, consider the following Ingress:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: path-normalization-ingress
spec:
  ingressClassName: nginx
  rules:
  - host: path-normalization.example.com
    http:
      paths:
      - path: "/uuid"
        pathType: Exact
        backend:
          service:
            name: httpbin
            port:
              number: 8000
```

Ingress-NGINX normalizes the path of each of the following requests to /uuid. Once the request matches the Exact path of /uuid, Ingress-NGINX responds with either a 200 OK or a 301 Moved Permanently to /uuid. The --path-as-is flag on the second command stops curl from resolving the .. segments itself before sending the request. For the following commands

```shell
curl -sS -H "Host: path-normalization.example.com" http://<your-ingress-ip>/uuid
curl -sS --path-as-is -H "Host: path-normalization.example.com" http://<your-ingress-ip>/ip/abc/../../uuid
curl -sSi -H "Host: path-normalization.example.com" http://<your-ingress-ip>////uuid
```

the outputs are similar to

```text
{
  "uuid": "29c77dfe-73ec-4449-b70a-ef328ea9dbce"
}
{
  "uuid": "d20d92e8-af57-4014-80ba-cf21c0c4ffae"
}
HTTP/1.1 301 Moved Permanently
…
Location: /uuid
…
```

Your backends might rely on the Ingress or Gateway API implementation to normalize URLs. Most Gateway API implementations enable some path normalization by default; for example, Istio, Envoy Gateway, and Kgateway all normalize . and .. segments out of the box. For other normalization steps, such as merging consecutive slashes, check the documentation for each Gateway API implementation that you use.
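If you want to check which canonical path a request should map to, Go's standard path.Clean is a reasonable approximation of the normalization steps listed above: it removes . segments, resolves .. against the preceding segment, and merges repeated slashes. This is a sketch under that assumption. It does not model how a given proxy responds (Ingress-NGINX, for instance, answers ////uuid with a redirect rather than routing it directly), and the normalize helper is hypothetical.

```go
package main

import (
	"fmt"
	"path"
	"strings"
)

// normalize is a hypothetical helper that approximates URL path normalization
// with path.Clean, which removes "." segments, resolves ".." against the
// previous segment, and merges repeated slashes.
func normalize(p string) string {
	cleaned := path.Clean(p)
	// path.Clean also drops a trailing slash; restore it, because "/my-path/"
	// and "/my-path" are different paths to a proxy (see Section 4).
	if strings.HasSuffix(p, "/") && cleaned != "/" {
		cleaned += "/"
	}
	return cleaned
}

func main() {
	for _, p := range []string{"/uuid", "/ip/abc/../../uuid", "////uuid", "/my/./path", "/my-path/"} {
		fmt.Printf("%-20s -> %s\n", p, normalize(p))
	}
}
```

All three request paths from the curl commands above map to /uuid, which is why they all reach the Exact /uuid rule.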
Conclusion

As we all race to respond to the Ingress-NGINX retirement, I hope this blog post instills some confidence that you can migrate safely and effectively despite all the intricacies of Ingress-NGINX. SIG Network has also been working on supporting the most common Ingress-NGINX annotations (and some of these unexpected behaviors) in Ingress2Gateway to help you translate Ingress-NGINX configuration into Gateway API and to offer alternatives to unsupported behavior.

SIG Network released Gateway API 1.5 earlier today (27th February 2026), which graduates features such as ListenerSet (which allows app developers to better manage TLS certificates) and the HTTPRoute CORS filter (which allows CORS configuration).

¹ You can use Istio purely as a Gateway API controller with no other service mesh features.

-

Spotlight on SIG Architecture: API Governance

on February 12, 2026 at 12:00 am

This is the fifth interview in a SIG Architecture Spotlight series that covers the different subprojects; this time we cover SIG Architecture: API Governance.

In this SIG Architecture spotlight, we talked with Jordan Liggitt, lead of the API Governance subproject.

Introduction

FM: Hello Jordan, thank you for your availability. Tell us a bit about yourself, your role, and how you got involved in Kubernetes.

JL: My name is Jordan Liggitt. I’m a Christian, husband, father of four, software engineer at Google by day, and amateur musician by stealth. I was born in Texas (and still like to claim it as my point of origin), but I’ve lived in North Carolina for most of my life.

I’ve been working on Kubernetes since 2014. At that time, I was working on authentication and authorization at Red Hat, and my very first pull request to Kubernetes attempted to add an OAuth server to the Kubernetes API server. It never exited work-in-progress status. I ended up going with a different approach that layered on top of the core Kubernetes API server in a different project (spoiler alert: this is foreshadowing), and I closed the pull request without merging it six months later.

Undeterred by that start, I stayed involved, helped build Kubernetes authentication and authorization capabilities, and got involved in the definition and evolution of the core Kubernetes APIs, from early beta APIs like v1beta3 to v1. I got tagged as an API reviewer in 2016 based on those contributions, and was added as an API approver in 2017.

Today, I help lead the API Governance and code organization subprojects for SIG Architecture, and I am a tech lead for SIG Auth.

FM: And when did you get specifically involved in the API Governance project?

JL: Around 2019.

Goals and scope of API Governance

FM: How would you describe the main goals and areas of intervention of the subproject?
JL: The surface area includes all the various APIs Kubernetes has, and there are APIs that people do not always realize are APIs: command-line flags, configuration files, how binaries are run, how they talk to back-end components like the container runtime, and how they persist data. People often think of “the API” as only the REST API… that is the biggest and most obvious one, and the one with the largest audience, but all of these other surfaces are also APIs. Their audiences are narrower, so there is more flexibility there, but they still require consideration.

The goals are to be stable while still enabling innovation. Stability is easy if you never change anything, but that contradicts the goal of evolution and growth. So we balance “be stable” with “allow change”.

FM: Speaking of changes, in terms of ensuring consistency and quality (which is clearly one of the reasons this project exists), what are the specific quality gates in the lifecycle of a Kubernetes change? Does API Governance get involved during the release cycle, prior to it through guidelines, or somewhere in between? At what points do you ensure the intended role is fulfilled?

JL: We have guidelines and conventions, both for APIs in general and for how to change an API. These are living documents that we update as we encounter new scenarios. They are long and dense, so we also support them with involvement at either the design stage or the implementation stage.

Sometimes, due to bandwidth constraints, teams move ahead with design work without feedback from API Review. That’s fine, but it means that when implementation begins, the API review will happen then, and there may be substantial feedback. So we get involved when a new API is created or an existing API is changed, either at design or implementation.

FM: Is this during the Kubernetes Enhancement Proposal (KEP) process? Since KEPs are mandatory for enhancements, I assume part of the work intersects with API Governance?

JL: It can.
KEPs vary in how detailed they are. Some include literal API definitions. When they do, we can perform an API review at the design stage. Then implementation becomes a matter of checking fidelity to the design. Getting involved early is ideal.

But some KEPs are conceptual and leave details to the implementation. That’s not wrong; it just means the implementation will be more exploratory. Then API Review gets involved later, possibly recommending structural changes.

There’s a trade-off regardless: detailed design upfront versus iterative discovery during implementation. People and teams work differently, and we’re flexible and happy to consult early or at implementation time.

FM: This reminds me of what Fred Brooks wrote in “The Mythical Man-Month” about conceptual integrity being central to product quality… No matter how you structure the process, there must be a point where someone looks at what is coming and ensures conceptual integrity. Kubernetes uses APIs everywhere, externally and internally, so API Governance is critical to maintaining that integrity. How is this captured?

JL: Yes, the conventions document captures patterns we’ve learned over time: what to do in various situations. We also have automated linters and checks to ensure correctness around patterns like spec/status semantics. These automated tools help catch issues even when humans miss them.

As new scenarios arise (and they do constantly), we think through how to approach them and fold the results back into our documentation and tools. Sometimes it takes a few attempts before we settle on an approach that works well.

FM: Exactly. Each new interaction improves the guidelines.

JL: Right. And sometimes the first approach turns out to be wrong. It may take two or three iterations before we land on something robust.

The impact of Custom Resource Definitions

FM: Is there any particular change, episode, or domain that stands out as especially noteworthy, complex, or interesting in your experience?
JL: The watershed moment was Custom Resources. Prior to that, every API was handcrafted by us and fully reviewed. There were inconsistencies, but we understood and controlled every type and field.

When Custom Resources arrived, anyone could define anything. The first version did not even require a schema. That made it extremely powerful (it enabled change immediately), but it left us playing catch-up on stability and consistency.

When Custom Resources graduated to General Availability (GA), schemas became required, but escape hatches still existed for backward compatibility. Since then, we’ve been working on giving CRD authors validation capabilities comparable to built-ins. Built-in validation rules for CRDs have only just reached GA in the last few releases.

So CRDs opened the “anything is possible” era. Built-in validation rules are the second major milestone: bringing consistency back.

The three major themes have been defining schemas, validating data, and handling pre-existing invalid data. With ratcheting validation (allowing data to improve without breaking existing objects), we can now guide CRD authors toward conventions without breaking the world.

API Governance in context

FM: How does API Governance relate to SIG Architecture and API Machinery?

JL: API Machinery provides the actual code and tools that people build APIs on. They don’t review APIs for storage, networking, scheduling, etc.

SIG Architecture sets the overall system direction and works with API Machinery to ensure the system supports that direction. API Governance works with other SIGs building on that foundation to define conventions and patterns, ensuring consistent use of what API Machinery provides.

FM: Thank you. That clarifies the flow. Going back to release cycles: do release phases (enhancements freeze, code freeze) change your workload? Or is API Governance mostly continuous?

JL: We get involved in two places: design and implementation.
Design involvement increases before enhancements freeze; implementation involvement increases before code freeze. However, many efforts span multiple releases, so there is always some design and implementation happening, even for work targeting future releases. Between those intense periods, we often have time to work on long-term design work.

An anti-pattern we see is teams thinking about a large feature for months and then presenting it three weeks before enhancements freeze, saying, “Here is the design, please review.” For big changes with API impact, it’s much better to involve API Governance early.

And there are good times in the cycle for this: between freezes, when people have bandwidth. That’s when long-term review work fits best.

Getting involved

FM: Clearly. Now, regarding team dynamics and new contributors: how can someone get involved in API Governance? What should they focus on?

JL: It’s usually best to follow a specific change rather than trying to learn everything at once. Pick a small API change, perhaps one someone else is making or one you want to make, and observe the full process: design, implementation, review.

High-bandwidth review (live discussion over video) is often very effective. If you’re making or following a change, ask whether there’s a time to go over the design or PR together. Observing those discussions is extremely instructive.

Start with a small change. Then move to a bigger one. Then maybe a new API. That builds understanding of conventions as they are applied in practice.

FM: Excellent. Any final comments, or anything we missed?

JL: Yes… the reason we care so much about compatibility and stability is for our users. It’s easy for contributors to see those requirements as painful obstacles preventing cleanup or requiring tedious work… but users have integrated with our system, and we made a promise to them: we want them to trust that we won’t break that contract.
So even when it requires more work, moves slower, or involves duplication, we choose stability.

We are not trying to be obstructive; we are trying to make life good for users.

A lot of our questions focus on the future: you want to do something now… how will you evolve it later without breaking it? We assume we will know more in the future, and we want the design to leave room for that.

We also assume we will make mistakes. The question then is: how do we leave ourselves avenues to improve while keeping compatibility promises?

FM: Exactly. Jordan, thank you, I think we’ve covered everything. This has been an insightful view into the API Governance project and its role in the wider Kubernetes project.

JL: Thank you.

-

Introducing Node Readiness Controller

on February 3, 2026 at 2:00 am

In the standard Kubernetes model, a node’s suitability for workloads hinges on a single binary “Ready” condition. However, in modern Kubernetes environments, nodes require complex infrastructure dependencies (such as network agents, storage drivers, GPU firmware, or custom health checks) to be fully operational before they can reliably host pods.

Today, on behalf of the Kubernetes project, I am announcing the Node Readiness Controller. This project introduces a declarative system for managing node taints, extending the readiness guardrails during node bootstrapping beyond standard conditions. By dynamically managing taints based on custom health signals, the controller ensures that workloads are only placed on nodes that have met all infrastructure-specific requirements.

Why the Node Readiness Controller?

The core Kubernetes Node “Ready” status is often insufficient for clusters with sophisticated bootstrapping requirements. Operators frequently struggle to ensure that specific DaemonSets or local services are healthy before a node enters the scheduling pool.

The Node Readiness Controller fills this gap by allowing operators to define custom scheduling gates tailored to specific node groups. This enables you to enforce distinct readiness requirements across heterogeneous clusters, ensuring, for example, that GPU-equipped nodes only accept pods once specialized drivers are verified, while general-purpose nodes follow a standard path.

It provides three primary advantages:

- Custom Readiness Definitions: Define what ready means for your specific platform.
- Automated Taint Management: The controller automatically applies or removes node taints based on condition status, preventing pods from landing on unready infrastructure.
- Declarative Node Bootstrapping: Manage multi-step node initialization reliably, with clear observability into the bootstrapping process.
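The core of automated taint management is a comparison between the conditions a rule requires and the conditions a node currently reports. The following Go sketch shows that decision in isolation. The condition and rule types, the shouldTaint function, and the example.com/GPUDriverReady condition and taint key are hypothetical; they are not the controller’s actual API or code, and the sketch ignores enforcement modes and dry run.

```go
package main

import "fmt"

// condition is a hypothetical stand-in for a Node condition (type and status).
type condition struct {
	Type   string
	Status string // "True", "False" or "Unknown"
}

// rule is a hypothetical, simplified readiness rule: the conditions a node must
// report, each with its required status, and the taint used to gate scheduling.
type rule struct {
	Required []condition
	TaintKey string
}

// shouldTaint reports whether the rule's taint belongs on the node: true when
// any required condition is missing or not at its required status.
func shouldTaint(r rule, nodeConditions []condition) bool {
	current := make(map[string]string, len(nodeConditions))
	for _, c := range nodeConditions {
		current[c.Type] = c.Status
	}
	for _, req := range r.Required {
		if current[req.Type] != req.Status {
			return true
		}
	}
	return false
}

func main() {
	gpu := rule{
		Required: []condition{{Type: "example.com/GPUDriverReady", Status: "True"}},
		TaintKey: "example.com/gpu-driver-unavailable",
	}
	fmt.Println(gpu.TaintKey, "on a fresh node:", shouldTaint(gpu, nil))
	fmt.Println(gpu.TaintKey, "once the driver reports ready:", shouldTaint(gpu,
		[]condition{{Type: "example.com/GPUDriverReady", Status: "True"}}))
}
```

Treating a missing condition the same as a failing one keeps the node tainted until something actively reports readiness, which is the conservative choice for a freshly bootstrapped node.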
Core concepts and features

The controller centers around the NodeReadinessRule (NRR) API, which allows you to define declarative gates for your nodes.

Flexible enforcement modes

The controller supports two distinct operational modes:

- Continuous enforcement: Actively maintains the readiness guarantee throughout the node’s entire lifecycle. If a critical dependency (like a device driver) fails later, the node is immediately tainted to prevent new scheduling.
- Bootstrap-only enforcement: Intended for one-time initialization steps, such as pre-pulling heavy images or hardware provisioning. Once conditions are met, the controller marks the bootstrap as complete and stops monitoring that specific rule for the node.

Condition reporting

The controller reacts to Node Conditions rather than performing health checks itself. This decoupled design allows it to integrate with existing tools in the ecosystem as well as with custom solutions:

- Node Problem Detector (NPD): Use existing NPD setups and custom scripts to report node health.
- Readiness Condition Reporter: A lightweight agent provided by the project that can be deployed to periodically check local HTTP endpoints and patch node conditions accordingly.

Operational safety with dry run

Deploying new readiness rules across a fleet carries inherent risk. To mitigate this, dry run mode allows operators to first simulate the impact on the cluster. In this mode, the controller logs intended actions and updates the rule’s status to show affected nodes without applying actual taints, enabling safe validation before enforcement.

Example: CNI bootstrapping

The following NodeReadinessRule ensures a node remains unschedulable until its CNI agent is functional. The controller monitors a custom cniplugin.example.net/NetworkReady condition and only removes the readiness.k8s.io/acme.com/network-unavailable taint once the status is True.
```yaml
apiVersion: readiness.node.x-k8s.io/v1alpha1
kind: NodeReadinessRule
metadata:
  name: network-readiness-rule
spec:
  conditions:
  - type: "cniplugin.example.net/NetworkReady"
    requiredStatus: "True"
  taint:
    key: "readiness.k8s.io/acme.com/network-unavailable"
    effect: "NoSchedule"
    value: "pending"
  enforcementMode: "bootstrap-only"
  nodeSelector:
    matchLabels:
      node-role.kubernetes.io/worker: ""
```

Getting involved

The Node Readiness Controller is just getting started, with our initial releases out, and we are seeking community feedback to refine the roadmap. Following our productive Unconference discussions at KubeCon NA 2025, we are excited to continue the conversation in person.

Join us at KubeCon + CloudNativeCon Europe 2026 for our maintainer track session: Addressing Non-Deterministic Scheduling: Introducing the Node Readiness Controller.

In the meantime, you can contribute or track our progress here:

- GitHub: https://sigs.k8s.io/node-readiness-controller
- Slack: Join the conversation in #sig-node-readiness-controller
- Documentation: Getting Started

-

New Conversion from cgroup v1 CPU Shares to v2 CPU Weight

on January 30, 2026 at 4:00 pm

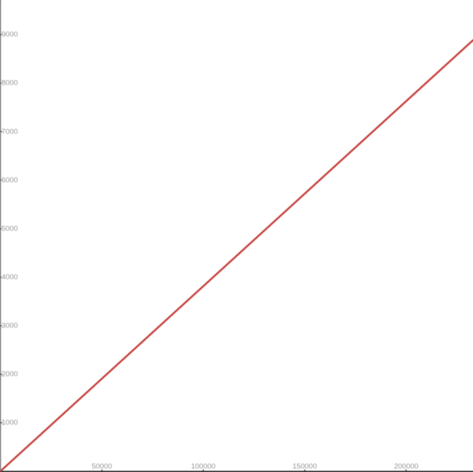

I’m excited to announce the implementation of an improved conversion formula from cgroup v1 CPU shares to cgroup v2 CPU weight. This enhancement addresses critical issues with CPU priority allocation for Kubernetes workloads when running on systems with cgroup v2.

Background

Kubernetes was originally designed with cgroup v1 in mind, where CPU shares were derived directly from the container’s CPU request in millicpu: cpu.shares = millicpu × 1024 / 1000. For example, a container requesting 1 CPU (1000m) gets cpu.shares = 1024.

After a while, cgroup v1 started being replaced by its successor, cgroup v2. In cgroup v2, the concept of CPU shares (which range from 2 to 262144, or from 2¹ to 2¹⁸) was replaced with CPU weight (which ranges from 1 to 10000, or from 10⁰ to 10⁴).

With the transition to cgroup v2, KEP-2254 introduced a conversion formula to map cgroup v1 CPU shares to cgroup v2 CPU weight. The conversion formula was defined as:

$$\text{cpu.weight} = 1 + \frac{(\text{cpu.shares} - 2) \times 9999}{262142}$$

This formula linearly maps values from [2¹, 2¹⁸] to [10⁰, 10⁴].

While this approach is simple, the linear mapping causes significant problems for both CPU priority and configuration granularity.

Problems with the previous conversion formula

The previous conversion formula creates two major issues:

1. Reduced priority against non-Kubernetes workloads

In cgroup v1, the default value for CPU shares is 1024, meaning a container requesting 1 CPU has equal priority with system processes that live outside of Kubernetes’ scope. However, in cgroup v2, the default CPU weight is 100, but the previous formula converts 1 CPU (1000m, or 1024 shares) to a weight of only ≈39, less than 40% of the default.

Example: Container requesting 1 CPU (1000m)

- cgroup v1: cpu.shares = 1024 (equal to default)
- cgroup v2 (previous formula): cpu.weight = 39 (much lower than default 100)

This means that after moving to cgroup v2, Kubernetes (or OCI) workloads would de facto reduce their CPU priority against non-Kubernetes processes.
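The numbers above can be reproduced with a short Go sketch. It assumes the mapping from millicpu to shares described in the Background (multiply by 1024/1000, clamped to [2, 262144]) and evaluates the KEP-2254 formula with integer division, which is why 1024 shares yields 39 rather than 39.98. The function names are illustrative.

```go
package main

import "fmt"

// milliCPUToShares sketches the cgroup v1 mapping from a CPU request in
// millicpu to shares: 1000m -> 1024, clamped to the [2, 262144] range.
func milliCPUToShares(milliCPU int64) uint64 {
	shares := milliCPU * 1024 / 1000
	if shares < 2 {
		return 2
	}
	if shares > 262144 {
		return 262144
	}
	return uint64(shares)
}

// oldSharesToWeight is the linear KEP-2254 formula with integer division.
// It expects shares in [2, 262144].
func oldSharesToWeight(shares uint64) uint64 {
	return 1 + ((shares-2)*9999)/262142
}

func main() {
	for _, m := range []int64{100, 1000} {
		s := milliCPUToShares(m)
		fmt.Printf("%dm -> cpu.shares=%d -> cpu.weight=%d\n", m, s, oldSharesToWeight(s))
	}
}
```

It prints 102 shares and a weight of 4 for 100m, and 1024 shares and a weight of 39 for 1000m.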
The problem can be severe for setups with many system daemons that run outside of Kubernetes’ scope and expect Kubernetes workloads to have priority, especially when resources are starved.

2. Unmanageable granularity

The previous formula produces very low values for small CPU requests, limiting the ability to create sub-cgroups within containers for fine-grained resource distribution (which may become much easier in the future; see KEP #5474 for more info).

Example: Container requesting 100m CPU
cgroup v1: cpu.shares = 102
cgroup v2 (previous formula): cpu.weight = 4 (too low for sub-cgroup configuration)

With cgroup v1, requesting 100m CPU, which led to 102 CPU shares, was manageable in the sense that sub-cgroups could be created inside the main container, assigning fine-grained CPU priorities to different groups of processes. With cgroup v2, however, a weight of 4 is very hard to distribute between sub-cgroups because it is not granular enough. With plans to allow writable cgroups for unprivileged containers, this becomes even more relevant.

New conversion formula

Description

The new formula is more complicated, but does a much better job of mapping cgroup v1 CPU shares to cgroup v2 CPU weight:

$$cpu.weight = \left\lceil 10^{\left(L^{2}/612 + 125L/612 - 7/34\right)} \right\rceil, \quad \text{where } L = \log_2(cpu.shares)$$

The exponent is a quadratic function of L, chosen so that the curve passes through the following points:
(2, 1): the minimum values of both ranges.
(1024, 100): the default values of both ranges.
(262144, 10000): the maximum values of both ranges.

At these points L is 1, 10 and 18, and the exponent evaluates to exactly 0, 2 and 4, giving weights of 1, 100 and 10000.

[Figure: the new conversion function plotted over the full range of CPU shares, followed by a zoomed-in view of the most relevant region.]

The new formula is “close to linear”, yet it is carefully designed to map the ranges so that it passes through the three important points above.

How it solves the problems

Better priority alignment: A container requesting 1 CPU (1000m, or 1024 shares) will now get cpu.weight = 100, matching cgroup v2’s default of 100.
This restores the intended priority relationship between Kubernetes workloads and system processes.

Improved granularity: A container requesting 100m CPU will get cpu.weight = 17, which enables better fine-grained resource distribution within containers.

Adoption and integration

This change was implemented at the OCI layer. In other words, it is not implemented in Kubernetes itself; therefore, adoption of the new conversion formula depends solely on which OCI runtime version you use. For example:
runc: the new formula is enabled from version 1.3.2.
crun: the new formula is enabled from version 1.23.

Impact on existing deployments

Important: Some consumers may be affected if they assume the older linear conversion formula. Applications or monitoring tools that directly calculate expected CPU weight values based on the previous formula may need updates to account for the new quadratic conversion. This is particularly relevant for:
Custom resource management tools that predict CPU weight values.
Monitoring systems that validate or expect specific weight values.
Applications that programmatically set or verify CPU weight values.

The Kubernetes project recommends testing the new conversion formula in non-production environments before upgrading OCI runtimes to ensure compatibility with existing tooling.

Where can I learn more?

For those interested in this enhancement:
Kubernetes GitHub Issue #131216 – Detailed technical analysis and examples, including discussions and reasoning for choosing the above formula.
KEP-2254: cgroup v2 – Original cgroup v2 implementation in Kubernetes.
Kubernetes cgroup documentation – Current resource management guidance.

How do I get involved?

For those interested in getting involved with Kubernetes node-level features, join the Kubernetes Node Special Interest Group. We always welcome new contributors and diverse perspectives on resource management challenges.
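For tooling affected by the change described under “Impact on existing deployments”, a minimal sketch of the new formula alongside the previous one might look like the following. It assumes that out-of-range inputs are clamped to the endpoints and that the previous formula uses integer division; the helper names (linear_weight, quadratic_weight, matching_formulas) are hypothetical, and the authoritative implementations live in the OCI runtimes themselves.

```python
import math

MIN_SHARES = 2
MAX_SHARES = 262144  # 2**18


def linear_weight(shares: int) -> int:
    """Previous (linear) conversion, with integer division assumed."""
    shares = max(MIN_SHARES, min(MAX_SHARES, shares))
    return 1 + ((shares - 2) * 9999) // 262142


def quadratic_weight(shares: int) -> int:
    """New conversion: ceil(10 ** (L**2/612 + 125*L/612 - 7/34)) with L = log2(shares)."""
    shares = max(MIN_SHARES, min(MAX_SHARES, shares))
    l = math.log2(shares)
    exponent = (l * l + 125 * l) / 612 - 7 / 34
    return math.ceil(10 ** exponent)


def matching_formulas(shares: int, observed_weight: int) -> list[str]:
    """Report which conversion formula(s) explain an observed cpu.weight value."""
    matches = []
    if linear_weight(shares) == observed_weight:
        matches.append("linear")
    if quadratic_weight(shares) == observed_weight:
        matches.append("quadratic")
    return matches
```

For example, matching_formulas(1024, 100) returns ["quadratic"], while matching_formulas(1024, 39) returns ["linear"]. At the minimum of 2 shares both formulas yield 1, so a check at that point cannot tell them apart; comparing at a mid-range value such as 102 shares (4 versus 17) is more informative.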

-

Ingress NGINX: Statement from the Kubernetes Steering and Security Response Committees

on January 29, 2026 at 12:00 am

In March 2026, Kubernetes will retire Ingress NGINX, a piece of critical infrastructure for about half of cloud native environments. The retirement of Ingress NGINX was announced for March 2026, after years of public warnings that the project was in dire need of contributors and maintainers. There will be no more releases for bug fixes, security patches, or any updates of any kind after the project is retired. This cannot be ignored, brushed off, or left until the last minute to address. We cannot overstate the severity of this situation or the importance of beginning migration to alternatives like Gateway API or one of the many third-party Ingress controllers immediately.

To be abundantly clear: choosing to remain with Ingress NGINX after its retirement leaves you and your users vulnerable to attack. None of the available alternatives are direct drop-in replacements. This will require planning and engineering time. Half of you will be affected. You have two months left to prepare.

Existing deployments will continue to work, so unless you proactively check, you may not know you are affected until you are compromised. In most cases, you can find out whether you rely on Ingress NGINX by running the following command with cluster administrator permissions:

kubectl get pods --all-namespaces --selector app.kubernetes.io/name=ingress-nginx

Despite its broad appeal, its widespread use by companies of all sizes, and repeated calls for help from the maintainers, the Ingress NGINX project never received the contributors it so desperately needed. According to internal Datadog research, about 50% of cloud native environments currently rely on this tool, and yet for the last several years, it has been maintained solely by one or two people working in their free time. Without sufficient staffing to maintain the tool to a standard that both we and our users would consider secure, the responsible choice is to wind it down and refocus efforts on modern alternatives like Gateway API.
We did not make this decision lightly; as inconvenient as it is now, doing so is necessary for the safety of all users and the ecosystem as a whole. Unfortunately, the flexibility that Ingress NGINX was designed with, once a boon, has become a burden that cannot be resolved. With the technical debt that has piled up, and fundamental design decisions that exacerbate security flaws, it is no longer reasonable or even possible to continue maintaining the tool even if resources did materialize.

We issue this statement together to reinforce the scale of this change and the potential for serious risk to a significant percentage of Kubernetes users if this issue is ignored. It is imperative that you check your clusters now. If you are reliant on Ingress NGINX, you must begin planning for migration.

Thank you,
Kubernetes Steering Committee
Kubernetes Security Response Committee